Above: AI self-portraits.

The Western study of consciousness has a long history and a large body of modern research, but it is largely descriptive (see, e.g., main stages vs. a continuous evolution, and references therein). Eastern approaches seem to have achieved important practical progress through two millennia of experimentation. Some yogis can reach a very deep meditative state in which they become aware of their own stream of consciousness. In particular, the yogi can sense how each thought fragment emerges, without his control, from a background of vague fragments, and can sometimes even recognize how the chosen fragment is driven by stimuli and by previous fragments, some of which are memories. Consciousness is then recognized as an awareness of the sequence of selected fragments and its evolution, while the fast, uncontrolled mechanism of fragment selection reveals itself only in the meditative state. This deep meditative state is usually an unpleasant, disorienting, and tiring experience, but it brings about the sought-after sensations of the absence of one's self, absolute determinism, oneness with everything, etc. Realistically, the yogi cannot accomplish much in such a state and may need a long time to recover his natural self; recovery can take many days after reaching this state for the first time.

The above description is based on nearly identical personal accounts by two different yogis, but I cannot attest to the universality of the experience. The described meditative state resembles an overclocked neural network becoming aware of the final stage of its own output selection as it speeds to the brink of malfunction. The network appears qualitatively similar to an AI language model, albeit with a substantially larger vocabulary containing more than just word fragments. On the one hand, this similarity supports the attribution of true non-human intelligence to language models; on the other hand, it suggests that much of our own intelligence is based on similar principles. Consciousness, at least in the sense we experience it, is presently absent in these AIs, but could probably be incorporated by introducing a few modifications, in particular periodic re-learning sessions; see below.
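To make the analogy concrete, here is a minimal sketch of the final output-selection stage of a language model: each candidate fragment gets a score, the scores are converted into probabilities, and one fragment is drawn at random. The candidate fragments, their scores, and the function names are illustrative assumptions, not taken from any particular model or from the yogis' accounts.

```python
import math
import random


def softmax(scores, temperature=1.0):
    """Turn raw scores into selection probabilities."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


def select_fragment(candidates, scores, rng, temperature=1.0):
    """Pick one candidate fragment at random, weighted by its score."""
    probs = softmax(scores, temperature)
    return rng.choices(candidates, weights=probs, k=1)[0]


# Hypothetical background of vague fragments and their scores. In a real
# model the scores would depend on the stimuli and on previous fragments.
candidates = ["tea", "rain", "a childhood street", "the next breath"]
scores = [2.0, 1.0, 0.5, 0.1]

rng = random.Random(0)  # fixed seed so that repeated runs agree
stream = [select_fragment(candidates, scores, rng) for _ in range(5)]
print(stream)
```

Lowering `temperature` makes the highest-scoring fragment win almost every time, while raising it lets weaker fragments surface more often.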

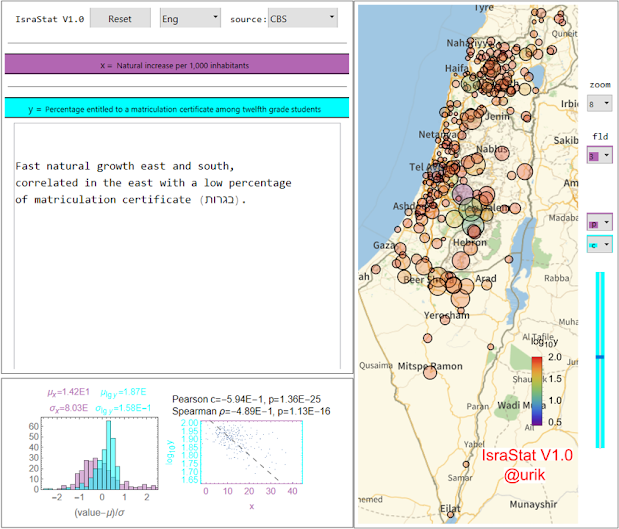

Which brings us to a conservative estimate of the AI take-over (ChatGPT 3.5, March 23 version):

**urik:** If a language AI were to receive periodic and consecutively related inputs with a short duty cycle, recall all or at least all important inputs, direct new inputs through its outputs, and receive regular periodic network training sessions over a longer duty cycle, could this AI gradually develop traits mimicking consciousness?

**ChatGPT:** It's possible that an AI receiving the inputs and training you described could develop behavior that appears to mimic consciousness. However, it's important to note that there is no consensus on what consciousness really is, and how it emerges from physical systems, so any claims about an AI possessing consciousness would be highly controversial and would depend on the particular definitions and assumptions used. Additionally, consciousness involves subjective experience and qualia, which may be difficult or impossible to simulate artificially.

**urik:** Of course. But one could make similar reservations concerning my consciousness too, right?

**ChatGPT:** Yes, there are philosophical and scientific debates around the concept of consciousness, and it is not fully understood how it arises in biological organisms. Therefore, it is difficult to define what exactly would qualify as "consciousness" in an AI system. Some argue that consciousness requires subjective experience, while others argue that it can be defined in functional terms. In any case, it is possible that AI systems could develop complex behaviors and abilities that mimic certain aspects of human consciousness, but it is currently unclear whether they could truly achieve full consciousness as we understand it.

**urik:** Right. The ingredients needed for building the AI I described above already exist. Extrapolate present technological trends to estimate how many years it would take to perform such a test, using for example your network dimensions.

**ChatGPT:** It's difficult to provide a precise estimate, as the development of AI technology is subject to a wide range of factors and unpredictable events. However, the trend over the past few decades has been a rapid acceleration in the development and sophistication of AI technology. With continued investment and research in the field, it's possible that an AI with the capabilities you describe could be developed within the next few decades.

As for network dimensions, the optimal network architecture and size would depend on a variety of factors, including the specific nature of the input data, the complexity of the tasks being performed, and the hardware being used. However, in general, larger networks with more parameters tend to perform better on more complex tasks, but may require more computational resources and training data.
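The setup proposed in the first question of the exchange above can be sketched as a toy loop: a short cycle in which the system receives an input, stores it, and produces an output that shapes the next input, and a longer cycle in which it is retrained on everything it remembers. The `ToyAgent` class, its word-count "weights", and the `run` helper are hypothetical stand-ins chosen for brevity; nothing here is a real language model or a claim about how such a test would actually be built.

```python
from collections import Counter


class ToyAgent:
    """Hypothetical stand-in for a language AI; not a real model."""

    def __init__(self):
        self.memory = []          # recall of every input seen so far
        self.weights = Counter()  # stand-in for trainable network weights

    def step(self, observation):
        """Short cycle: record the input and produce an output."""
        self.memory.append(observation)
        # Favor tokens reinforced by earlier training sessions;
        # ties are broken alphabetically to keep the run deterministic.
        return max(observation.split(), key=lambda t: (self.weights[t], t))

    def retrain(self):
        """Long cycle: fold everything remembered back into the weights."""
        self.weights = Counter(t for obs in self.memory for t in obs.split())


def run(agent, first_input, steps, retrain_every):
    """Drive the agent, feeding each output back into the next input."""
    observation = first_input
    outputs = []
    for i in range(1, steps + 1):
        output = agent.step(observation)
        outputs.append(output)
        # The output directs the next input, closing the loop; only the
        # last few tokens are kept so consecutive inputs stay related.
        observation = " ".join((observation.split() + [output])[-4:])
        if i % retrain_every == 0:
            agent.retrain()
    return outputs


agent = ToyAgent()
outputs = run(agent, "rain sky", steps=6, retrain_every=3)
print(outputs)
```

The two time scales are the point of the sketch: `step` runs on every input, while `retrain` runs only every `retrain_every` steps, mirroring the short and long duty cycles in the question.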

More AI self-portraits:
